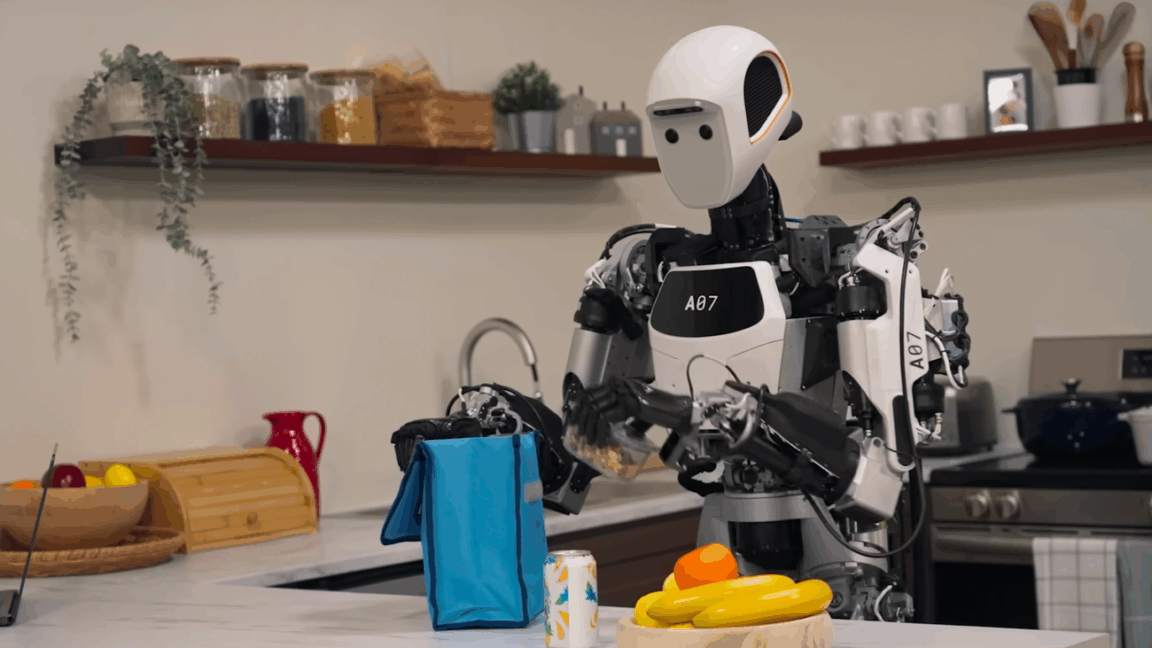

Google releases a new Gemini Robotics On&Device model with an SDK and says the vision language action model can adapt to new tasks in 50 to 100 demonstrations (Ryan Whitwam/Ars Technica)

Ryan Whitwam / Ars Technica: Google releases a new Gemini Robotics On-Device model with an SDK and says the vision language action model can adapt to new tasks in 50 to 100 demonstrations — We sometimes call chatbots like Gemini and ChatGPT “robots,” but generative AI is also playing a growing role in real, physical robots.